Segment Image Using Flood Fill

SUMMARY

Segment Image Using Flood Fill segments a single connected region of an image by growing it outward from a seed point.

Flood fill segmentation starts from a seed point and fills connected regions with similar color values. This method is useful for filling regions, removing noise, and segmenting objects when you know a point inside the target region.

Use this Skill when you want to segment connected regions starting from a known seed point.

The Skill

from telekinesis import cornea

result = cornea.segment_image_using_flood_fill(
    image=image,
    seed_point=(0, 0),
    tolerance=10,
)

Example

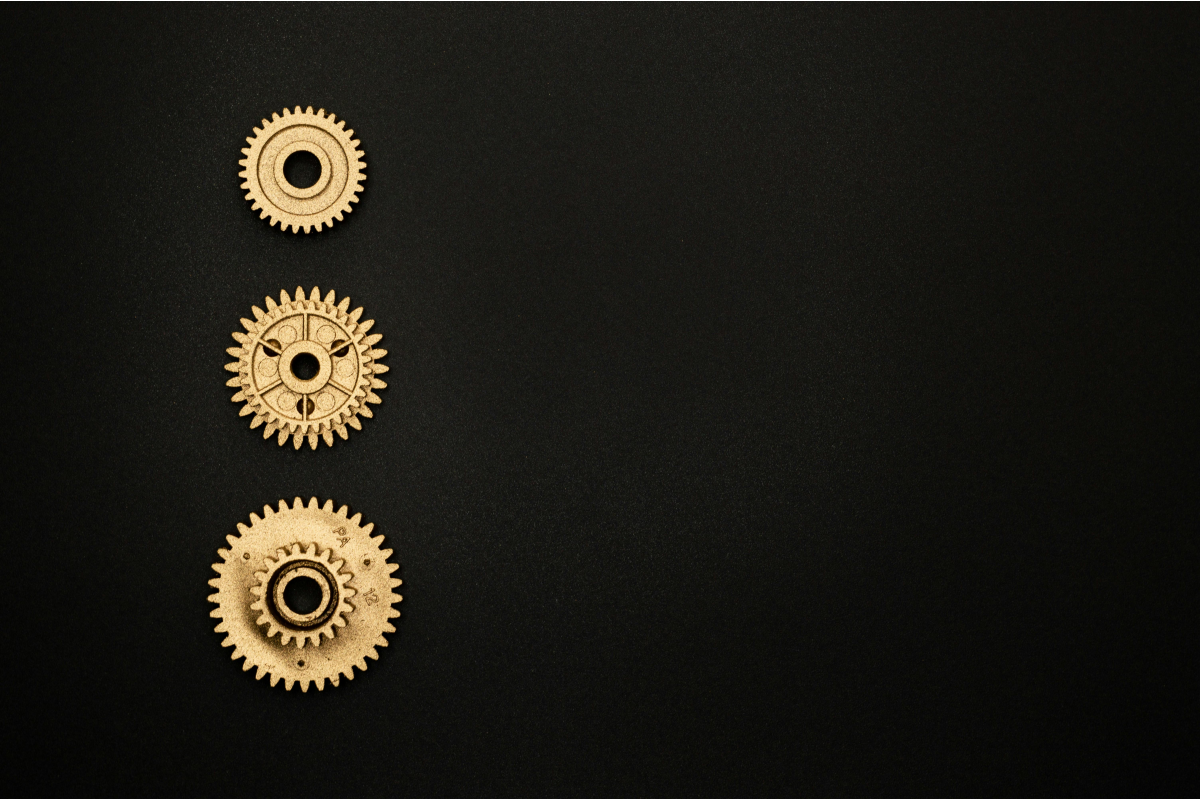

Input Image

Original image for flood fill segmentation

Output Image

Segmented region using flood fill from seed point

The Code

import pathlib

from telekinesis import cornea
from datatypes import io

DATA_DIR = pathlib.Path("path/to/telekinesis-data")

# Load image
filepath = str(DATA_DIR / "images" / "erode.jpg")
image = io.load_image(filepath=filepath)

# Perform flood fill segmentation
result = cornea.segment_image_using_flood_fill(
    image=image,
    seed_point=(0, 0),
    tolerance=10,
)

# Access results
annotation = result["annotation"].to_dict()
mask = annotation["labeled_mask"]

The Explanation of the Code

Flood fill segmentation starts from a seed point and fills all connected pixels that are within a tolerance of the seed point's color. This is useful for filling regions or segmenting objects when you know a point inside the target.
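To make the idea concrete, here is a minimal sketch of a flood fill in plain Python. It is an illustration only, not the Skill's actual implementation: it assumes a grayscale image stored as a list of rows, 4-connectivity, and a comparison against the seed pixel's value. The function name `flood_fill_mask` is hypothetical.

```python
from collections import deque


def flood_fill_mask(image, seed_point, tolerance):
    """Return a binary mask of pixels 4-connected to the seed within tolerance.

    `image` is a list of rows of grayscale intensities; `seed_point` is (x, y).
    """
    height, width = len(image), len(image[0])
    seed_x, seed_y = seed_point
    seed_value = image[seed_y][seed_x]

    mask = [[0] * width for _ in range(height)]
    mask[seed_y][seed_x] = 1
    queue = deque([(seed_x, seed_y)])

    while queue:
        x, y = queue.popleft()
        # Visit the four direct neighbours (right, left, down, up).
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if 0 <= nx < width and 0 <= ny < height and not mask[ny][nx]:
                # Compare against the seed value, not the neighbouring pixel.
                if abs(image[ny][nx] - seed_value) <= tolerance:
                    mask[ny][nx] = 1
                    queue.append((nx, ny))
    return mask


image = [
    [10, 12, 200, 11],
    [11, 13, 200, 10],
    [200, 200, 200, 12],
]
mask = flood_fill_mask(image, seed_point=(0, 0), tolerance=10)
for row in mask:
    print(row)
```

In this toy image the right-hand column has values close to the seed, but it is separated from the seed by bright pixels, so it stays unfilled. Only pixels connected to the seed are included.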

The code begins by importing the required modules and loading an image:

import pathlib

from telekinesis import cornea
from datatypes import io

DATA_DIR = pathlib.Path("path/to/telekinesis-data")

filepath = str(DATA_DIR / "images" / "erode.jpg")
image = io.load_image(filepath=filepath)

The flood fill parameters are configured:

- seed_point is the starting point (x, y) for the flood fill operation

- tolerance is the color tolerance for determining which pixels to fill

result = cornea.segment_image_using_flood_fill(
    image=image,
    seed_point=(0, 0),
    tolerance=10,
)

The function returns a dictionary containing an annotation object in COCO panoptic format. Extract the mask as follows:

annotation = result["annotation"].to_dict()
mask = annotation["labeled_mask"]

Running the Example

Runnable examples are available in the Telekinesis examples repository. Follow the README in that repository to set up the environment. Once set up, you can run this specific example with:

cd telekinesis-examples
python examples/cornea_examples.py --example segment_image_using_flood_fill

How to Tune the Parameters

The segment_image_using_flood_fill Skill has 2 parameters:

seed_point (default: (0, 0)):

- Starting point for flood fill as an (x, y) tuple

- Units: Pixel coordinates

- Adjust to point inside the target region

- Can be Points2D, np.ndarray, list, or tuple
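Because the seed point is given as (x, y) while row-major image arrays are usually indexed as [row, col], the two orders are easy to swap by accident. The sketch below shows one way to check a seed point before using it. The helper `seed_to_row_col` is hypothetical and not part of the library, and whether the Skill itself validates bounds is not stated here.

```python
def seed_to_row_col(seed_point, image_width, image_height):
    """Convert an (x, y) seed point to a (row, col) index, checking bounds."""
    x, y = (int(round(value)) for value in seed_point)
    if not (0 <= x < image_width and 0 <= y < image_height):
        raise ValueError(
            f"seed_point {(x, y)} lies outside a {image_width}x{image_height} image"
        )
    # Row-major arrays are indexed as [row, col], i.e. [y, x].
    return y, x


# A click at x=3, y=1 in a 4-pixel-wide, 2-pixel-high image.
row, col = seed_to_row_col((3, 1), image_width=4, image_height=2)
print(row, col)
```

The same point passed as (1, 3) would fall outside this image, which is the kind of mistake a bounds check surfaces early.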

tolerance (default: 10):

- Color tolerance for flood fill

- Units: Pixel intensity difference

- Increase to fill larger regions with color variation

- Decrease for more precise filling

- Typical range: 1-50

TIP

Best practice: Use a color picker or click detection to determine the seed point coordinates. Adjust tolerance based on color variation within your target region.
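One rough way to pick a starting tolerance is to measure how much pixel values vary in a small window around the seed. The sketch below is a heuristic under stated assumptions, not a library feature: it assumes a grayscale image stored as a list of rows, and the helper name `suggest_tolerance` and its `radius` parameter are hypothetical.

```python
def suggest_tolerance(image, seed_point, radius=2):
    """Largest absolute difference from the seed value in a square window."""
    height, width = len(image), len(image[0])
    seed_x, seed_y = seed_point
    seed_value = image[seed_y][seed_x]

    max_diff = 0
    # Clamp the window to the image borders.
    for y in range(max(0, seed_y - radius), min(height, seed_y + radius + 1)):
        for x in range(max(0, seed_x - radius), min(width, seed_x + radius + 1)):
            max_diff = max(max_diff, abs(image[y][x] - seed_value))
    return max_diff


image = [
    [100, 103, 98, 250],
    [101, 105, 97, 250],
    [99, 102, 96, 250],
]
inside_region = suggest_tolerance(image, seed_point=(1, 1), radius=1)
crossing_edge = suggest_tolerance(image, seed_point=(1, 1), radius=2)
print(inside_region, crossing_edge)
```

The estimate is only meaningful while the window stays inside the target region. Once it crosses an edge, as with the larger radius here, the suggested value jumps and would let the fill leak into neighboring regions.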

Where to Use the Skill in a Pipeline

Segment Image Using Flood Fill is commonly used in the following pipelines:

- Interactive segmentation - When a user clicks on a region

- Background removal - Fill background from edge seed points

- Region filling - Fill holes or gaps in segmented regions

- Connected component extraction - Extract specific connected regions

A typical pipeline for interactive segmentation looks as follows:

from telekinesis import cornea
from datatypes import io

# 1. Load the image
image = io.load_image(filepath=...)

# 2. Get seed point from user click or detection
seed_point = (x, y)  # From user interaction

# 3. Perform flood fill segmentation
result = cornea.segment_image_using_flood_fill(
    image=image,
    seed_point=seed_point,
    tolerance=10,
)

# 4. Process filled region
annotation = result["annotation"].to_dict()
mask = annotation["labeled_mask"]

Related skills to build such a pipeline:

- load_image: Load images from disk

- segment_image_using_watershed: Alternative region-based segmentation

Alternative Skills

| Skill | vs. Segment Image Using Flood Fill |
|---|---|
| segment_image_using_watershed | Watershed uses markers. Use watershed for multiple regions, flood fill for a single region from a seed point. |
| segment_image_using_grab_cut | GrabCut is more sophisticated. Use GrabCut for complex segmentation, flood fill for simple region filling. |

When Not to Use the Skill

Do not use Segment Image Using Flood Fill when:

- You don't know a seed point (Use other segmentation methods)

- You need to segment multiple disconnected regions (Use watershed or other methods)

- Regions have high color variation (May require higher tolerance or different method)

TIP

Flood fill is particularly useful for interactive applications where a user can click on a region to segment it.