Cornea

Segment images using classical or deep learning models of your choice

Status: Released

Any robot. Any task. One Physical AI platform.

Telekinesis Agentic Skill Library for Computer Vision, Robotics and Physical AI applications

The Telekinesis Agentic Skill Library is the first large-scale Python library for building agentic robotics, computer vision, and Physical AI systems. It provides:

The library is intended for robotics, computer vision, and research teams that want to:

Get started immediately with the Telekinesis Agentic Skill Library (Python 3.11 or 3.12):

```bash
pip install telekinesis-ai
```

💡 TIP

A free API_KEY is required.

Create one at platform.telekinesis.ai.

See the Quickstart for more details.

Join our Discord community to add your own skills and be part of the Physical AI revolution!

A Skill is a reusable operation for robotics, computer vision, and Physical AI. Skills span 2D/3D perception (6D pose estimation, 2D/3D detection, segmentation, and image processing), motion planning (RRT*, motion generators, trajectory optimization), and motion control (model predictive control, reinforcement learning policies). Skills can be chained into pipelines to build real-world robotics applications.
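The sketch below illustrates what "chained into pipelines" means. It assumes a Skill can be modeled as a plain Python callable that takes one input and returns one output. The functions `compute_centroid`, `center_point_cloud`, `scale_to_unit_extent`, and `chain` are hypothetical local stand-ins written with NumPy. They are not Telekinesis APIs; real Skills are cloud-hosted and take keyword arguments, as in the examples that follow. The centroid is taken as the arithmetic mean of the points, which is the usual definition.

```python
from functools import reduce
from typing import Callable

import numpy as np

# Hypothetical model: a "Skill" is any callable mapping one array to another.
# These are local NumPy stand-ins, NOT Telekinesis APIs.
Skill = Callable[[np.ndarray], np.ndarray]


def compute_centroid(points: np.ndarray) -> np.ndarray:
    """Centroid of an (N, 3) point cloud: the mean of its points."""
    return points.mean(axis=0)


def center_point_cloud(points: np.ndarray) -> np.ndarray:
    """Translate the cloud so that its centroid sits at the origin."""
    return points - compute_centroid(points)


def scale_to_unit_extent(points: np.ndarray) -> np.ndarray:
    """Scale the cloud so that its largest absolute coordinate becomes 1."""
    extent = np.abs(points).max()
    return points / extent if extent > 0 else points


def chain(*skills: Skill) -> Skill:
    """Compose Skills left to right: the output of one feeds the next."""
    return lambda data: reduce(lambda acc, skill: skill(acc), skills, data)


# Usage: a two-step pipeline that normalizes a small point cloud.
pipeline = chain(center_point_cloud, scale_to_unit_extent)
cloud = np.array([[1.0, 2.0, 3.0], [3.0, 2.0, 3.0], [2.0, 4.0, 3.0], [2.0, 0.0, 3.0]])
normalized = pipeline(cloud)
print(normalized)
```

Composing Skills this way keeps each step small and testable on its own. The pipeline is just another Skill, so it can itself be chained further.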

Below are examples of what a Skill looks like:

Example 1: calculate_point_cloud_centroid. This skill calculates the centroid of a 3D point cloud.

```python
# Example 1

from telekinesis import vitreous  # Import Vitreous - 3D point cloud processing module

# Executing a 3D point cloud Skill
# (`point_cloud` is assumed to have been loaded beforehand)
centroid = vitreous.calculate_point_cloud_centroid(  # Executing Skill - `calculate_point_cloud_centroid`
    point_cloud=point_cloud
)
```

Example 2: filter_image_using_gaussian_blur. A simple Skill that applies a Gaussian blur to an image.

```python
# Example 2

from telekinesis import pupil  # Import Pupil - 2D image processing module

# Executing a 2D image processing Skill
# (`image` is assumed to have been loaded beforehand)
blurred_image = pupil.filter_image_using_gaussian_blur(  # Executing Skill - `filter_image_using_gaussian_blur`
    image=image,
    kernel_size=7,
    sigma_x=3.0,
    sigma_y=3.0,
    border_type="default",
)
```

Skills are organized in Skill Groups. Each Skill Group can be imported directly from the telekinesis library:

```python
from telekinesis import vitreous  # point cloud processing skills
from telekinesis import retina  # object detection skills
from telekinesis import cornea  # image segmentation skills
from telekinesis import pupil  # image processing skills
from telekinesis import illusion  # synthetic data generation skills
from telekinesis import iris  # AI model training skills
from telekinesis import neuroplan  # robotics skills
from telekinesis import medulla  # hardware communication skills
from telekinesis import cortex  # Physical AI agents
```


Recent advances in LLMs and VLMs have shown that learned models can perform semantic reasoning, task decomposition, and high-level planning from vision and language inputs. Examples include Llama 4, Mistral, Qwen, Gemini Robotics, RT-2, π₀, world models, and Dream-based VLAs.

In the Telekinesis library, a Physical AI Agent, typically a Vision Language Model (VLM) or Large Language Model (LLM), autonomously interprets natural language instructions and generates high-level Skill plans. In autonomous Physical AI systems, Agents continuously produce and execute Skill plans, allowing the system to operate with minimal human intervention.
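The plan-then-execute loop can be sketched as follows. This is a hypothetical illustration only. The `Step` structure, the `SKILL_REGISTRY`, the `$name` convention for passing earlier results, and both placeholder Skills were invented for this example. The plan is also hard-coded, where a real system would have an LLM or VLM generate it.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Tuple

# Hypothetical plan format and registry, invented for illustration only.
SKILL_REGISTRY: Dict[str, Callable[..., Any]] = {}


def register(name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Decorator that makes a function callable by name from a plan."""
    def wrap(fn: Callable[..., Any]) -> Callable[..., Any]:
        SKILL_REGISTRY[name] = fn
        return fn
    return wrap


@dataclass
class Step:
    skill: str                                  # name of a registered Skill
    args: Dict[str, Any] = field(default_factory=dict)
    store_as: Optional[str] = None              # key for this step's result


@register("detect_object")
def detect_object(label: str) -> Tuple[float, float, float]:
    # Placeholder: a real Skill would run a detector and return a pose.
    return (0.4, 0.1, 0.02)


@register("move_to")
def move_to(position: Tuple[float, float, float]) -> str:
    # Placeholder: a real Skill would command a robot.
    return f"moved to {position}"


def execute_plan(plan: List[Step]) -> Dict[str, Any]:
    """Run each step in order; "$key" arguments refer to earlier results."""
    context: Dict[str, Any] = {}
    for step in plan:
        if step.skill not in SKILL_REGISTRY:
            raise KeyError(f"Unknown Skill in plan: {step.skill}")
        args = {
            k: context[v[1:]] if isinstance(v, str) and v.startswith("$") else v
            for k, v in step.args.items()
        }
        result = SKILL_REGISTRY[step.skill](**args)
        if step.store_as is not None:
            context[step.store_as] = result
    return context


# A plan an agent might emit for "move to the red cube" (hard-coded here).
plan = [
    Step("detect_object", {"label": "red cube"}, store_as="cube_pose"),
    Step("move_to", {"position": "$cube_pose"}, store_as="status"),
]
context = execute_plan(plan)
print(context["status"])
```

Rejecting unknown Skill names before execution is a simple guard against a model hallucinating a step the system cannot perform.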

To learn more about building Physical AI Agents with Telekinesis, explore Cortex.

Telekinesis Agentic Skill Library Architecture

Flow Overview

To understand the architectural motivations and system design of Telekinesis, read the Introduction.

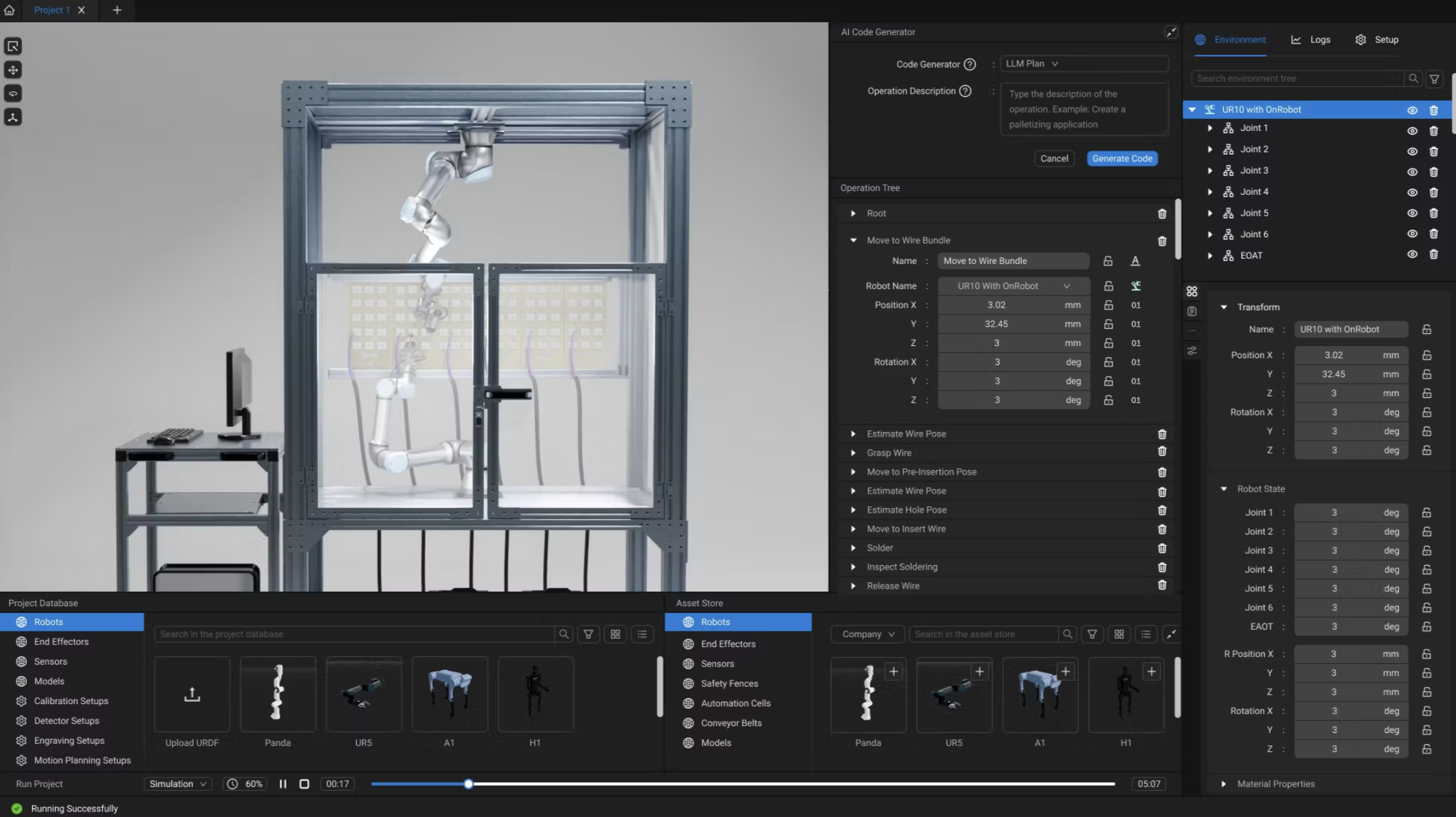

Automated relay soldering powered by Physical AI

The Telekinesis Agentic Skill Library helps you build real-world robotics and Physical AI applications for industries such as manufacturing, automotive, and aerospace. Below are some use cases that the Telekinesis team has already deployed with the Skill Library.

Automated Basil Harvesting

Build vision-based manipulation systems for agricultural robotics that operate reliably in unstructured outdoor environments.

With Telekinesis, detect plants, estimate grasp poses, and execute adaptive, closed-loop motions to safely handle delicate objects.

Carton Palletizing

Create vision-guided robotic palletizing systems that adapt dynamically to changing layouts and product variations in industrial automation environments.

Telekinesis enables object detection, pose estimation, and motion planning to work together for accurate placement across varying pallet configurations.

Lights-Out Laser Engraving

Develop fully autonomous robotic engraving systems using vision-based alignment and closed-loop execution.

Telekinesis enables precise laser engraving even when part placement and orientation vary.

Automated Assembly

Build multi-step robotic assembly systems that combine task planning, coordinated manipulation, and precise motion execution.

Telekinesis allows perception, task coordination, and motion execution to be integrated into a single end-to-end assembly system.

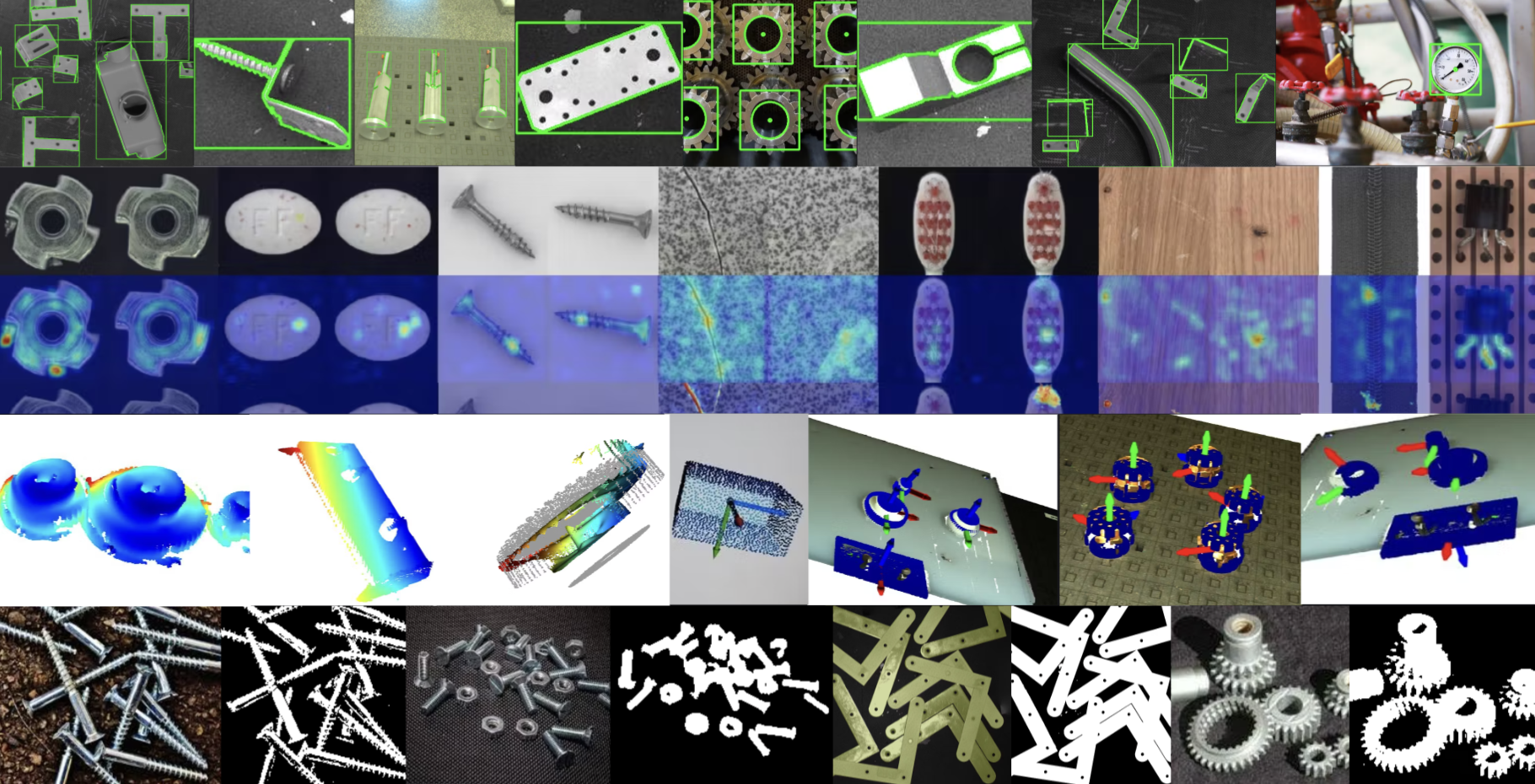

Vision-Based Quality Control

Implement vision-based quality control and inspection pipelines using industrial computer vision for reliable defect detection and dimensional verification.

Using vision-based perception components, parts can be analyzed for surface defects, dimensional accuracy, or inconsistencies with repeatable results.

Start building your own use cases swiftly (Python 3.11 or 3.12):

```bash
pip install telekinesis-ai
```

💡 TIP

A free API_KEY needs to be obtained from platform.telekinesis.ai.

See the Quickstart for more details.

A major pain point in robotics is that every robot vendor has its own control interface. We offer a Skill Group called neuroplan that provides a unified interface for controlling any industrial, mobile, or humanoid robot.

We currently support: Universal Robots (real & simulation), KUKA (real & simulation), ABB (real & simulation), Franka Emika (real & simulation), Boston Dynamics (simulation), Anybotics (simulation), Unitree (simulation).

```python
from telekinesis import neuroplan  # robotics skills
```

Prototype on any robot, perform any task on the same platform, and deploy the same Skill Groups anywhere: any robot, any task, one Physical AI platform.

Learn more about the offered robotic skills in Neuroplan Overview.
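A unified robot interface is essentially the adapter pattern. Task code targets one abstract API, and a per-vendor adapter translates that API into the vendor's own protocol. The sketch below assumes nothing about Neuroplan's real API. `RobotInterface`, `SimulatedArm`, and `nudge_all_joints` are hypothetical names, and the simulated arm only stores the joint positions it is commanded to.

```python
from abc import ABC, abstractmethod
from typing import List, Sequence

# Hypothetical adapter-pattern sketch; not Neuroplan's actual API.


class RobotInterface(ABC):
    """The single API that task code is written against."""

    @abstractmethod
    def move_joints(self, joint_positions: Sequence[float]) -> None: ...

    @abstractmethod
    def get_joint_positions(self) -> List[float]: ...


class SimulatedArm(RobotInterface):
    """Stand-in adapter: a real one would speak a vendor's protocol instead."""

    def __init__(self, num_joints: int) -> None:
        self._joints = [0.0] * num_joints

    def move_joints(self, joint_positions: Sequence[float]) -> None:
        if len(joint_positions) != len(self._joints):
            raise ValueError(
                f"expected {len(self._joints)} joints, got {len(joint_positions)}"
            )
        self._joints = list(joint_positions)

    def get_joint_positions(self) -> List[float]:
        return list(self._joints)


def nudge_all_joints(robot: RobotInterface, delta: float) -> List[float]:
    """Vendor-agnostic task code: runs unchanged on any RobotInterface."""
    current = robot.get_joint_positions()
    robot.move_joints([q + delta for q in current])
    return robot.get_joint_positions()


# Usage: the same task on a 6-axis and a 7-axis arm.
for arm in (SimulatedArm(6), SimulatedArm(7)):
    print(len(arm.get_joint_positions()), nudge_all_joints(arm, 0.1))
```

Because `nudge_all_joints` only depends on the abstract interface, supporting a new robot means writing one more adapter. The task code stays untouched.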

Telekinesis Agentic Skill Library supports industrial, mobile and humanoid robots

The library offers production-grade computer vision Skill Groups for object detection, segmentation, pose estimation, synthetic data generation and AI model training.

```python
from telekinesis import vitreous  # point cloud processing skills
from telekinesis import retina  # object detection skills
from telekinesis import cornea  # image segmentation skills
from telekinesis import pupil  # image processing skills
from telekinesis import illusion  # synthetic data generation skills
from telekinesis import iris  # AI model training skills
from telekinesis import medulla  # sensor interface skills
```

Furthermore, we offer medulla, a unified interface to cameras such as Zivid, Mechmind, Microsoft Kinect, and others. Learn more in the Medulla Overview.

2D Image Processing, Object Detection and Segmentation

Build reusable 2D vision pipelines using Pupil for low-level image processing, Retina for object detection, and Cornea for segmentation and mask generation.

These Skill Groups can be composed into standalone perception pipelines that operate on images, video streams, or sensor data, without requiring robot motion or control in the loop.
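The sketch below illustrates a purely image-based pipeline in the spirit of chaining Pupil into Cornea. It first blurs a grayscale image with a separable Gaussian kernel, using the same `kernel_size=7` and `sigma=3.0` values as Example 2. It then segments the result with Otsu's threshold, a classical segmentation method. The code is plain NumPy and does not call Telekinesis. The function names are hypothetical, and the input is assumed to be a grayscale array with values in the 0 to 255 range.

```python
import numpy as np


def gaussian_kernel_1d(kernel_size: int, sigma: float) -> np.ndarray:
    """Normalized 1D Gaussian kernel; kernel_size is assumed to be odd."""
    half = kernel_size // 2
    x = np.arange(-half, half + 1, dtype=np.float64)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()


def gaussian_blur(image: np.ndarray, kernel_size: int = 7, sigma: float = 3.0) -> np.ndarray:
    """Separable Gaussian blur of a 2D grayscale image with reflected borders."""
    kernel = gaussian_kernel_1d(kernel_size, sigma)
    half = kernel_size // 2
    padded = np.pad(image.astype(np.float64), half, mode="reflect")
    # The 2D Gaussian is separable: filter along rows, then along columns.
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)


def otsu_threshold(image: np.ndarray) -> float:
    """Otsu's method: the threshold that maximizes between-class variance."""
    hist, edges = np.histogram(image, bins=256, range=(0.0, 256.0))
    centers = (edges[:-1] + edges[1:]) / 2.0
    n0 = np.cumsum(hist)                  # pixel count of class 0 (bins <= t)
    n1 = hist.sum() - n0                  # pixel count of class 1 (bins > t)
    s0 = np.cumsum(hist * centers)        # intensity sum of class 0
    s1 = s0[-1] - s0                      # intensity sum of class 1
    valid = (n0 > 0) & (n1 > 0)
    between = np.zeros_like(centers)
    mu0 = s0[valid] / n0[valid]
    mu1 = s1[valid] / n1[valid]
    # Proportional to w0 * w1 * (mu0 - mu1)^2, the between-class variance.
    between[valid] = n0[valid] * n1[valid] * (mu0 - mu1) ** 2
    return float(edges[np.argmax(between) + 1])


def segment_foreground(image: np.ndarray, kernel_size: int = 7, sigma: float = 3.0) -> np.ndarray:
    """Blur, then mark pixels at or above the Otsu threshold as foreground."""
    blurred = gaussian_blur(image, kernel_size, sigma)
    return blurred >= otsu_threshold(blurred)


# Usage: a noisy dark image with one bright 24x24 square.
rng = np.random.default_rng(0)
image = np.full((64, 64), 40.0)
image[20:44, 20:44] = 200.0
image = np.clip(image + rng.normal(0.0, 10.0, image.shape), 0, 255)
mask = segment_foreground(image)
print(mask.sum())
```

Blurring before thresholding suppresses pixel noise that would otherwise produce speckles in the mask. The trade-off is that sharp corners get slightly rounded.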

3D Point Cloud Processing & Mesh Generation

Develop geometric alignment pipelines using Vitreous to register point clouds or meshes against reference models or scenes.

Vitreous provides reusable registration Skills, such as ICP-based alignment and global registration, that enable precise localization, model-to-scene matching, and change detection in industrial inspection workflows.
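The sketch below shows textbook point-to-point ICP-based alignment. Each iteration pairs every source point with its nearest target point. It then solves for the best rigid transform with the SVD-based Kabsch method, applies it, and repeats. This is a NumPy illustration with brute-force nearest neighbours, so it only suits small clouds. It is not Vitreous's implementation, and `best_fit_transform` and `icp` are hypothetical names. The returned `(R, t)` is a 6D pose (3D rotation plus 3D translation).

```python
import numpy as np


def best_fit_transform(src: np.ndarray, dst: np.ndarray):
    """Kabsch/SVD: rigid (R, t) minimizing sum ||R @ src_i + t - dst_i||^2."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)       # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:                  # reject reflections
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    return R, dst_c - R @ src_c


def icp(source: np.ndarray, target: np.ndarray, max_iterations: int = 50, tolerance: float = 1e-12):
    """Point-to-point ICP with brute-force nearest neighbours (small clouds only)."""
    R_total, t_total = np.eye(3), np.zeros(3)
    current = source.copy()
    prev_error = np.inf
    for _ in range(max_iterations):
        # 1. Correspondences: nearest target point for every source point.
        d2 = ((current[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
        matched = target[d2.argmin(axis=1)]
        # 2. Best rigid transform for these pairs, applied to the source.
        R, t = best_fit_transform(current, matched)
        current = current @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t
        # 3. Stop once the RMS residual no longer changes.
        error = np.sqrt(((current - matched) ** 2).sum(axis=1).mean())
        if abs(prev_error - error) < tolerance:
            break
        prev_error = error
    return R_total, t_total, current


# Usage: recover a small known rotation (2 deg about z) plus translation.
rng = np.random.default_rng(42)
target = rng.uniform(-1.0, 1.0, size=(200, 3))
a = np.deg2rad(2.0)
R_true = np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])
t_true = np.array([0.02, -0.01, 0.015])
source = (target - t_true) @ R_true          # row-wise R_true^T (x - t_true)
R_est, t_est, aligned = icp(source, target)
print(np.abs(aligned - target).max())
```

ICP only converges reliably from a reasonable initial alignment. This is why a global registration step is typically run first, with ICP used for the final refinement.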

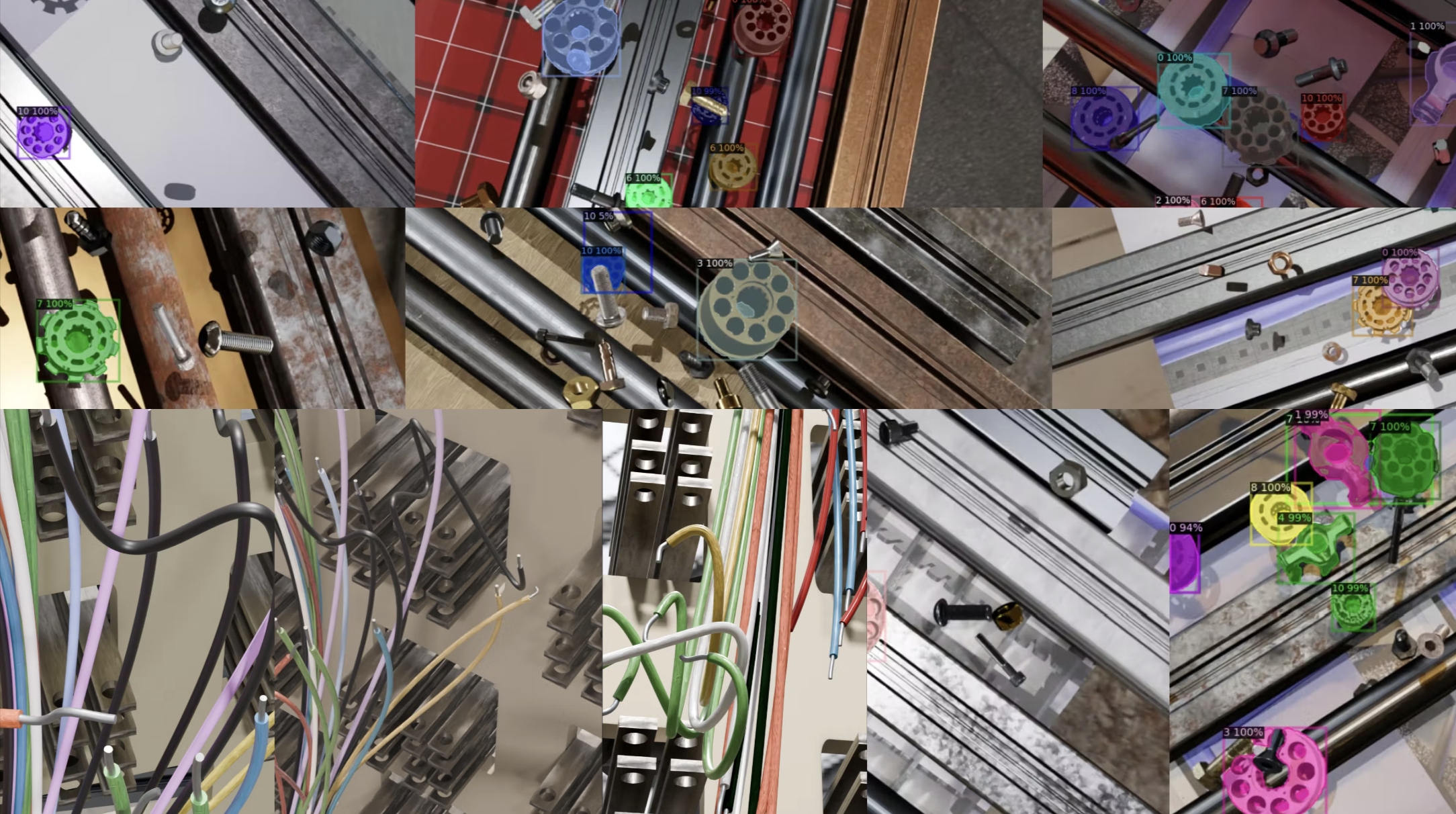

3D Object Detection & 6D Pose Estimation

Create 3D object detection and 6D pose estimation pipelines by combining Retina for object detection with Vitreous for point cloud filtering, registration, and geometric pose estimation.

These Skill Groups enable object localization from point clouds or multi-view sensor data for downstream tasks such as grasp planning, inspection, and vision-guided manipulation.

Synthetic Data Generation & AI Model Training

Generate photo-realistic synthetic image datasets for training object detection, segmentation, and classification models using the Illusion skill group.
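A key advantage of synthetic data is that labels come for free, because the generator knows exactly where it drew each object. The toy sketch below shows only that idea, using flat rectangles on a noisy background. It is nowhere near photo-realistic and is not how Illusion works. `render_synthetic_sample` is a hypothetical name.

```python
import numpy as np


def render_synthetic_sample(rng: np.random.Generator, size: int = 64, max_objects: int = 3):
    """Toy generator: random bright rectangles on a dark background, plus labels.

    Returns (image, mask): image is uint8, mask holds an instance id per pixel
    (0 = background). Later rectangles occlude earlier ones, in image and mask alike.
    """
    image = rng.uniform(0.0, 60.0, size=(size, size))     # noisy dark background
    mask = np.zeros((size, size), dtype=np.int32)
    num_objects = int(rng.integers(1, max_objects + 1))
    for instance_id in range(1, num_objects + 1):
        h, w = (int(v) for v in rng.integers(8, size // 2, size=2))
        y = int(rng.integers(0, size - h))
        x = int(rng.integers(0, size - w))
        image[y:y + h, x:x + w] = rng.uniform(150.0, 255.0)   # flat bright object
        mask[y:y + h, x:x + w] = instance_id                    # label drawn at the same time
    return image.astype(np.uint8), mask


# Usage: a small labeled dataset, with no manual annotation involved.
rng = np.random.default_rng(7)
dataset = [render_synthetic_sample(rng) for _ in range(10)]
print(len(dataset), dataset[0][0].shape, int(dataset[0][1].max()))
```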

Train state-of-the-art AI models in the cloud on generated datasets and deploy them to real-world systems using the Iris skill group.

Brainwave is the Telekinesis Physical AI cloud platform for managing skill orchestration, simulation, digital twins, and robot deployments from a single system. It enables agent-based robotics systems to be developed, deployed, and operated at scale across heterogeneous robots and tasks.

A first look at Brainwave: coming soon.

Develop and simulate digital twin workflows to validate, stress-test, and optimize Skill Groups. Deploy the same Skill Groups to real-world robots using a simulation-to-real transfer pipeline.

CNC Machine Tending

Pick and Place

Surface Polishing

Robotic Welding

Metal Palletizing

Palletizing

You can easily integrate the Telekinesis Agentic Skill Library into your own application. Set up the library in just 4 steps and start building!

Since all Skills are hosted in the cloud, a free API key is required to access them securely. Create a Telekinesis account and generate an API key for free.

Store the key in a safe location, such as your shell configuration file (e.g. .zshrc, .bashrc) or another secure location on your computer.

Export the API key as an environment variable. Open a terminal window and run the command for your operating system.

Replace <your_api_key> with the one generated in Step 1.

On macOS or Linux:

```bash
export TELEKINESIS_API_KEY="<your_api_key>"
```

On Windows:

```cmd
setx TELEKINESIS_API_KEY "<your_api_key>"
```

WARNING

For Windows, after running setx, restart the terminal for the changes to take effect.

The Telekinesis SDK uses this API key to authenticate requests and automatically reads it from your system environment.

We currently support Python 3.11 and 3.12. Make sure your environment uses one of these versions.
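Before running the examples, a small preflight check can catch the two most common setup problems from the steps above: an unsupported Python version and a missing `TELEKINESIS_API_KEY`. This is a hypothetical helper, not part of the SDK, which performs its own authentication. Only the variable name and the supported versions come from this page.

```python
import os
import sys
from typing import List, Mapping, Optional, Sequence

SUPPORTED_PYTHON = {(3, 11), (3, 12)}   # versions listed on this page


def preflight(env: Optional[Mapping[str, str]] = None,
              version_info: Optional[Sequence[int]] = None) -> List[str]:
    """Hypothetical setup check; returns a list of problems (empty = ready)."""
    env = os.environ if env is None else env
    major, minor = tuple(version_info or sys.version_info)[:2]
    problems = []
    if (major, minor) not in SUPPORTED_PYTHON:
        problems.append(f"Python {major}.{minor} is not supported; use 3.11 or 3.12.")
    if not env.get("TELEKINESIS_API_KEY", "").strip():
        problems.append("TELEKINESIS_API_KEY is not set in this environment.")
    return problems


# Usage: print any problems before running the examples.
for problem in preflight():
    print(f"[setup] {problem}")
```

On Windows, run the check in a new terminal after `setx`, because the variable is not visible to terminals that were already open.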

Install the core SDK using pip:

```bash
pip install telekinesis-ai
```

Clone the telekinesis-examples repository from GitHub with:

```bash
git clone --depth 1 --recurse-submodules --shallow-submodules https://github.com/telekinesis-ai/telekinesis-examples.git
```

INFO

This also downloads the telekinesis-data repository, which contains sample data used by the examples. You can replace this with your own data when using Telekinesis in your own projects. Download time may vary depending on your internet connection.

Change into telekinesis-examples:

```bash
cd telekinesis-examples
```

Install the dependencies used by the examples:

```bash
pip install numpy scipy opencv-python rerun-sdk==0.27.3 loguru
```

Run the segment_image_using_sam example:

```bash
python examples/cornea_examples.py --example segment_image_using_sam
```

If the example runs successfully, a Rerun visualization window will open showing the result.

INFO

Rerun is a visualization tool used to display 3D data and processing results.

If you zoom out, the Telekinesis Agentic Skill Library and Brainwave are just the beginning.

OUR VISION

Our vision is to build a vibrant community of contributors who help grow the Physical AI Skill ecosystem.

We want you to join us. Maybe you’re a researcher who just published a paper and built some code you’re proud of. Maybe you’re a hobbyist tinkering with robots in your garage. Maybe you’re an engineer tackling tough automation challenges every day. Whatever your background, if you have a Skill, whether it’s a perception module, a motion planner, or a clever robot controller, we want to see it.

The idea is simple: release your Skill, let others use it, improve it, and see it deployed in real-world systems. Your work could go from a lab or workshop into factories, helping robots do things that were previously too dangerous, repetitive, or precise for humans.

Our vision is about building something practical, reusable, and meaningful. Together, we can make robotics software accessible, scalable, and trustworthy. And we’d love for you to be part of it.

Join our Discord community to be part of the Physical AI revolution!

Explore all the features of Telekinesis Agentic Skill Library with Vitreous!